Mon Feb 17 2020

Efficient Data Structures for MMO Game Back-ends in Java

... and how I almost rewrote the backend in Rust, but didn't.

Java gurus beware - none of this is new news. However, it is interesting, and I ran into it recently for the first time while dealing with large heaps. In most Java applications I've worked on, data on the heap was either very short-lived (per-request) or only temporarily allocated (batch processing).

The Context

However, for the past six months I've been working on TDWorld. TDWorld can best be described as a global-scale tower defense MMO. That's a mouthful, but that's what it is. It's a location-based game where you claim buildings and connect them. For example, let's say you claim your house and then the supermarket down the street. You connect them, and if your creeps make it from your house to the supermarket you get in-game currency. The game plays on a real map - using real roads and buildings. Your enemies can take a building in between your house and that supermarket, level it up, and stop your creeps from reaching their destination. Hence, the strategy part.

The Problem

We have a few requirements. We need to be able to simulate vehicle traffic, in the form of in-game creeps, and also support queries that allow towers to efficiently find the nearby creeps to attack. On top of this we need to sync state with your phone. It's not a small task.

On top of that, we don't have a lot of money. I started with MapBox for the traffic routing and quickly racked up thousands of dollars in API requests during testing. This was a big no-no, so we now run our own instance of the routing server.

For a quick example, here's a very early version of the client that rendered an Android TextureView over MapBox's SurfaceView by using reflection to grab its contents and overwrite it (Android devs will know how dirty a hack this is). But it lets us demonstrate the game. Don't worry, I'm getting to the technical stuff soon.

Interesting Update

As of 2022, the game is now built in a custom 3D OSRM renderer on top of libgdx. The article continues with the old 2D renderer.

This example is playing the game in Redwood City. Ignore the graphics. Just see how the creeps follow the roads and the towers have to shoot them. Everyone on their phone should see the same thing as you.

This demonstrates the kind of queries we need to support. For this we make heavy use of David Moten's rtree.
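
The query in question is "which creeps are near this tower". As a rough illustration of that query shape only, here is a minimal sketch using a plain uniform grid in standard Java. It does not use the rtree library's API and it is not the game's index; the class name `GridIndex`, the cell size, and the integer creep ids are all assumptions made for the example. It searches a square box around the tower and ignores wrap-around at ±180 degrees.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical uniform-grid index over lat/lng points; not the game's actual index. */
class GridIndex {
    /** One indexed point. */
    private static final class Entry {
        final int id;
        final float lat, lng;
        Entry(int id, float lat, float lng) { this.id = id; this.lat = lat; this.lng = lng; }
    }

    private final float cellSizeDeg;                       // assumed cell size, in degrees
    private final Map<Long, List<Entry>> cells = new HashMap<>();

    GridIndex(float cellSizeDeg) { this.cellSizeDeg = cellSizeDeg; }

    /** Maps a coordinate to its cell number along one axis. */
    private int cell(float v) { return (int) Math.floor(v / cellSizeDeg); }

    /** Packs the two cell numbers into a single map key. */
    private static long key(int cx, int cy) { return ((long) cx << 32) | (cy & 0xFFFFFFFFL); }

    void insert(int id, float lat, float lng) {
        cells.computeIfAbsent(key(cell(lat), cell(lng)), k -> new ArrayList<>())
             .add(new Entry(id, lat, lng));
    }

    /** Returns ids of entries inside the square box of half-width radiusDeg around (lat, lng). */
    List<Integer> nearby(float lat, float lng, float radiusDeg) {
        List<Integer> result = new ArrayList<>();
        // Only the cells overlapping the box are visited, not the whole data set.
        for (int cx = cell(lat - radiusDeg); cx <= cell(lat + radiusDeg); cx++) {
            for (int cy = cell(lng - radiusDeg); cy <= cell(lng + radiusDeg); cy++) {
                List<Entry> bucket = cells.get(key(cx, cy));
                if (bucket == null) continue;
                for (Entry e : bucket) {
                    if (Math.abs(e.lat - lat) <= radiusDeg && Math.abs(e.lng - lng) <= radiusDeg) {
                        result.add(e.id);
                    }
                }
            }
        }
        return result;
    }
}
```

A creep that moves would have to be removed from its old cell and re-inserted into the new one, which this sketch leaves out.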

That's not so much what this post is about; I can write a separate post about immutable rtrees. This post is about reducing memory, because oh man did we have a problem with that.

Interesting Update

As of 2022, we now use a custom grid-based geospatial index instead of R-trees, which proved to be too complicated and carried too much overhead.

Technical Juicy Stuff

Here are all the problems I had, as of last Friday:

- Seeding was super slow. On launch, we want to have NPCs in every major city in the US (before we launch globally) proportional to the population of said city, randomly distributed from the city's center. It took almost 30 minutes to seed this data and build the indexes, which was too slow to iterate and develop on.

- Saving and restoring game state was super slow. Like a minute to checkpoint to disk and ten minutes to restore.

- We were using way too much memory. For around 300k towers and a few thousand creeps we saw heap sizes of 14gb. This could equal hundreds of gigabytes in the real world - needlessly expensive! Also, the connections themselves will take a lot of memory, so we can't spend all of our resources on the game engine alone.

Efficiency is important here because of scale. A poorly coded backend could mean six months of work wasted if we go down on launch day and nobody comes back to the app - and we don't have the money or time to scale horizontally. So to "get it out there" we need to have a backend that'll scale on one machine, up to a million concurrent connections, and handle tens of millions of actors (creeps) moving around concurrently. For concurrency, we shard in-memory geographically, but I'll cover this in a separate post. If we need to scale horizontally it's just a matter of building an orchestrator to move the shards between instances - and no we can't back this with a traditional database. Millions of concurrent writes with a high write concern basically mean that the app layer needs to have its own in-memory database that checkpoints to disk (and that's what we have). This is also how Redis works!

There was a point where I blamed Java for my poor performance, and I decided to try a trial rewrite in Rust. I figured I'd just 1:1 port the Java code and see what the performance difference was.

I ported all the structures and then the seeding code. This was a super painful two days, and I am not a Rust fan yet. I think I get Rust, it's just stressful and not so fun to write.

Anyway, the code written in Rust blew away the code in Java. It could seed the data for a thousand cities, and create all the NPC users, in seconds.

Then I realized I had forgotten to pull out my profiler. N00b mistake. A quick dig into JDK Mission Control showed a number of problems.

I had read the hot path in the Java code a hundred times and couldn't see why the seeding was so slow. The code was so trivial in fact I just didn't think of using a profiler. I suppose I thought it was Java's fault because I was fighting the garbage collector for a while, but I should have known better because switching to Shenandoah fixed that.

(By the way, Shenandoah is awesome. We went from multi-second pauses to never seeing STW pauses of more than a few hundred milliseconds, even during serialization/checkpointing to disk.)

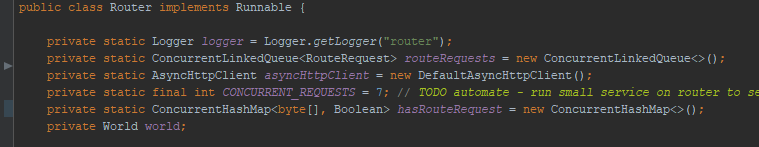

So anyway. The profiler. Here is the cause of my first problem, the seeding being slow. During seeding, we enqueue a request to route between the source and target towers - and there can be hundreds of thousands of these requests. The downstream routing server is in C++ but it can still only handle so many requests concurrently, so we queue them up in memory in the game backend using a custom task executor.

And that executor - uses a ConcurrentLinkedQueue.

So what's wrong with that? Well, every time we enqueue a request we check the count of the queued items to see if we should execute that one right away:

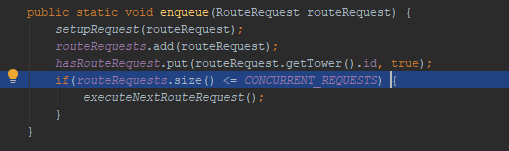

The problem is ConcurrentLinkedQueue is implemented using a linked list. We call size() on it A LOT, and each time it was iterating through the whole queue. I'm not sure why the JDK doesn't optimize this using atomics, as that would probably introduce less contention than what it's doing today. All we had to do was use an AtomicInteger to keep track of the count.
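
The Javadoc for `ConcurrentLinkedQueue.size()` does warn that it is not a constant-time operation and requires a traversal of the elements. A minimal sketch of the fix described above, assuming a small wrapper class (the name `CountedQueue` is made up for illustration and is not the game's executor):

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical wrapper: a ConcurrentLinkedQueue with a constant-time element count. */
class CountedQueue<T> {
    private final ConcurrentLinkedQueue<T> queue = new ConcurrentLinkedQueue<>();
    private final AtomicInteger count = new AtomicInteger();

    void add(T item) {
        // Increment first so the counter can never be observed below zero.
        count.incrementAndGet();
        queue.add(item);
    }

    /** Returns the next item, or null if the queue is empty. */
    T poll() {
        T item = queue.poll();
        if (item != null) {
            count.decrementAndGet();
        }
        return item;
    }

    /** Reads the counter instead of walking the linked list. */
    int size() {
        return count.get();
    }
}
```

Under concurrent use the counter can briefly run ahead of the queue's contents, which is usually acceptable for a "should we run this one right away?" check.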

So that's #1 solved. The Java version was now faster than Rust.

So now #2

Here's where we talk about data structures, sorry.

Ok - I wrote the title of this and then realized it takes a while to actually get to what the title's about. Thanks for getting this far.

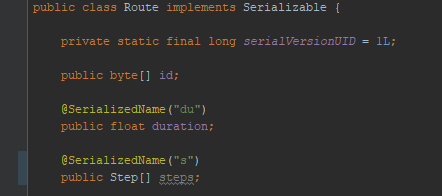

Most of what we need to store in memory is the routing information. Each route - for example between your house and the grocery store - consists of a bunch of steps.

These steps are basically objects with the properties {duration, speed, and lat/lng}. And we store A LOT of these. Whenever a creep is primed to move again, we get the next step and then update its position in the rtree.

We had originally represented geographical position information using doubles. This gives us a very high degree of accuracy - but at a cost!

Before we optimize, let's look at some stats. We can optimize forever, so we have to quantify when we're done. Let's say 5gb of heap is where we'd be happy with our testing dataset.

Note that the creep/tower count is just some info about one shard that I have running; it doesn't mean that much.

Before Optimization:

CREEPS=3934 TOWERS=263278 TICK DURATION=125.0

Size on disk: 2.322gb

Memory: 14gb

Serialization (checkpoint and app startup) Time

To Disk (nvme): 69540ms

From Disk: 10 minutes

Wow! Over a minute to save! That might not sound too bad, but it takes that long because it's doing something intensive. The game engine will already check how many threads are free and pause one of the shards if needed to serialize to disk - however, we don't want to do that for a long time.

So, first things first. A double on the JVM is 8 bytes; a float is 4. Also, we store a lot of Strings but don't really make use of the String class. Let's change everything to float and byte[].
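
As a rough picture of that change, here is a sketch with made-up classes. `TowerBefore`, `TowerAfter`, and their fields are assumptions for illustration, since the real classes are not shown in this post.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical "before" layout: a String and two doubles. */
class TowerBefore {
    String name;   // a separate String object that wraps its own backing array
    double lat;    // 8 bytes
    double lng;    // 8 bytes
}

/** Hypothetical "after" layout: raw bytes and two floats. */
class TowerAfter {
    byte[] name;   // UTF-8 bytes, decoded only when a String is really needed
    float lat;     // 4 bytes
    float lng;     // 4 bytes

    static TowerAfter from(TowerBefore t) {
        TowerAfter out = new TowerAfter();
        out.name = t.name.getBytes(StandardCharsets.UTF_8);
        out.lat = (float) t.lat;   // narrowing conversion: precision is lost here
        out.lng = (float) t.lng;
        return out;
    }

    String nameAsString() {
        return new String(name, StandardCharsets.UTF_8);
    }
}
```

The byte[] still has its own array header, so the saving on the text side comes from dropping the extra String object around it.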

Not too bad. Now let's measure what the effect was.

After changing double to float and String to byte[]

CREEPS=2910 TOWERS=263278 DURATION=202.0

Size on disk: 1.49gb

Memory: 12gb

Serialization (checkpoint and app startup) Time

To Disk: 64443ms

From Disk: 9.5 minutes

Alright, by changing the geographical coordinate representation from double to float we gave up about 10 meters of accuracy and got back 2gb of RAM and minor serialization improvements.

Not great.

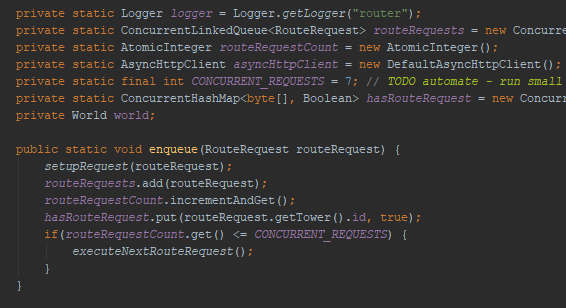

However, one thing I realized was that each Route has many Step[] arrays. Each Step just contains a few floats and a Position object now (which also has two floats).

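So basically our route information is a series of floats. Each entry in that Step[] array also costs a pointer (up to eight bytes, four with compressed references), and every Step and Position object carries its own object header on top of that. That really adds up. Let's get rid of those objects.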
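
To put rough numbers on "that really adds up", here is a back-of-the-envelope calculation. Every layout constant in it is an assumption: a 64-bit HotSpot JVM with compressed references (12-byte object header, 4-byte references, 8-byte alignment), a Step holding two floats plus a Position reference, and a Position holding two floats. Actual sizes depend on the JVM, its flags, and the real field list.

```java
/** Back-of-the-envelope sizes; all layout constants below are assumptions. */
class StepFootprint {
    static final int HEADER = 12;   // assumed object header size
    static final int REF = 4;       // assumed compressed reference size
    static final int FLOAT = 4;     // a Java float is always 4 bytes
    static final int ALIGN = 8;     // assumed object alignment

    /** Rounds an object size up to the next multiple of the alignment. */
    static int align(int bytes) {
        return (bytes + ALIGN - 1) / ALIGN * ALIGN;
    }

    /** Step object + its Position object + the Step[] slot pointing at the Step. */
    static int bytesPerStepAsObjects() {
        int step = align(HEADER + 2 * FLOAT + REF);   // duration, speed, position reference
        int position = align(HEADER + 2 * FLOAT);     // lat, lng
        return step + position + REF;
    }

    /** Four consecutive floats in one flat float[]: duration, speed, lat, lng. */
    static int bytesPerStepFlat() {
        return 4 * FLOAT;
    }

    public static void main(String[] args) {
        System.out.println("objects: " + bytesPerStepAsObjects() + " bytes per step");
        System.out.println("flat:    " + bytesPerStepFlat() + " bytes per step");
    }
}
```

Under these assumptions the object form costs 52 bytes per step against 16 bytes for four packed floats, before counting the extra work the garbage collector does to trace all those small objects.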

![Route Obj Using float[]](images/tdw-route-1.png)

Then we just need some utilities to access this newly formatted data:

So what in the Kotlin is going on here? Well, we're basically serializing our data at rest into an array. This is much more compact than a bunch of objects and is a common tactic for databases written in Java - use primitives as much as possible.
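
The utilities themselves are not reproduced in this text, so here is a rough Java sketch of what such accessors could look like. The class name `FlatRoute`, the stride of four floats per step, and the field order (duration, speed, lat, lng) are assumptions for illustration.

```java
/** Hypothetical accessors over a route stored as [duration, speed, lat, lng, ...]. */
final class FlatRoute {
    static final int STRIDE = 4;     // floats per step (assumed layout)
    static final int DURATION = 0;   // offsets of each field within one step
    static final int SPEED = 1;
    static final int LAT = 2;
    static final int LNG = 3;

    private FlatRoute() {}           // static helpers only, never instantiated

    static int stepCount(float[] route) {
        return route.length / STRIDE;
    }

    static float duration(float[] route, int step) {
        return route[step * STRIDE + DURATION];
    }

    static float speed(float[] route, int step) {
        return route[step * STRIDE + SPEED];
    }

    static float lat(float[] route, int step) {
        return route[step * STRIDE + LAT];
    }

    static float lng(float[] route, int step) {
        return route[step * STRIDE + LNG];
    }
}
```

Reading a step is plain index arithmetic on one array, so moving a creep to its next step allocates nothing.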

This means that this:

    {
      "route": [
        {
          "duration": 2,
          "speed": 5,
          "position": {
            "lat": 76.4,
            "lng": -170
          }
        },
        {
          "duration": 4,
          "speed": 3,
          "position": {
            "lat": 76.2,
            "lng": -170.1
          }
        }
      ]
    }

Becomes:

    {
      "route": [
        2,
        5,
        76.4,
        -170,
        4,
        3,
        76.2,
        -170.1
      ]
    }
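
In Java terms, the conversion between those two shapes could look roughly like this. The `Step` and `Position` classes here are stand-ins that mirror the first JSON shape, and `RouteFlattener` is a made-up name; none of them are the game's real classes.

```java
/** Hypothetical object form of a position, mirroring the first JSON shape. */
class Position {
    final float lat;
    final float lng;

    Position(float lat, float lng) {
        this.lat = lat;
        this.lng = lng;
    }
}

/** Hypothetical object form of one route step. */
class Step {
    final float duration;
    final float speed;
    final Position position;

    Step(float duration, float speed, Position position) {
        this.duration = duration;
        this.speed = speed;
        this.position = position;
    }
}

class RouteFlattener {
    /** Packs each step as four consecutive floats: duration, speed, lat, lng. */
    static float[] flatten(Step[] steps) {
        float[] flat = new float[steps.length * 4];
        int i = 0;
        for (Step s : steps) {
            flat[i++] = s.duration;
            flat[i++] = s.speed;
            flat[i++] = s.position.lat;
            flat[i++] = s.position.lng;
        }
        return flat;
    }
}
```

After flattening, the Step and Position objects can be dropped, leaving a single array per route for the garbage collector to track.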

Not only is this much nicer for the garbage collector, it's also much better for serialization in general - both to disk and over the network.
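
To show why a flat array serializes so easily, here is a minimal sketch that writes a route as a length prefix followed by the raw floats. The format and the `RouteCodec` name are assumptions for illustration, not the game's actual checkpoint format.

```java
import java.nio.ByteBuffer;

/** Hypothetical codec: a 4-byte element count followed by the raw floats. */
class RouteCodec {
    static byte[] encode(float[] route) {
        ByteBuffer buf = ByteBuffer.allocate(Integer.BYTES + route.length * Float.BYTES);
        buf.putInt(route.length);
        for (float f : route) {
            buf.putFloat(f);
        }
        return buf.array();
    }

    static float[] decode(byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        float[] route = new float[buf.getInt()];
        // The float view starts at the current position, right after the length prefix.
        buf.asFloatBuffer().get(route);
        return route;
    }
}
```

The two-step example route encodes to 36 bytes (4 for the count plus 8 floats of 4 bytes each), with no per-object framing or field names.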

You'd think that the JVM is optimizing things like this already, but the best it can do is keep the data the references point to close to each other, and maybe compact the pointers.

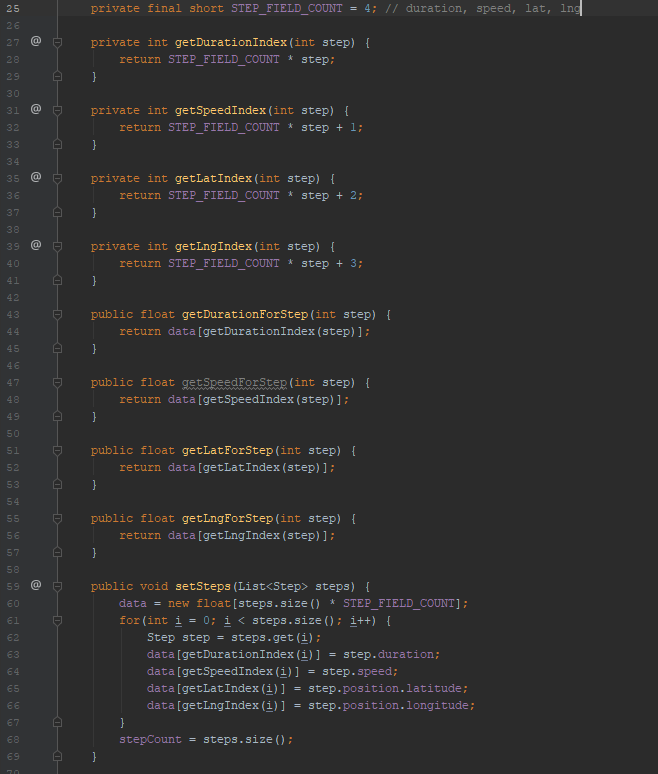

The JVM does magical things. But behold.

After redesigning the Route storage

| | Before Optimizations | After |
|---|---|---|
| Size on disk | 2.322gb | 873mb |
| Memory | 14gb | 1.8gb |
| To Disk Time | 69540ms | 4183ms |
| From Disk Time | 10 minutes | 24 seconds |

Amazing. Our shard size on disk has gone down from over two gigabytes to 873mb. Note that the app is still running in the background and at 527314 creeps the size on disk is still less than a gigabyte.

Our memory usage went from 14gb to less than two.

Startup time went from ten minutes to 24 seconds, and writing to disk went from over a minute to four seconds.

This was a super productive past couple of days, and I'm very happy with the result. I'm hoping that soon we can show off the full game and prepare for launch.

Next I'll be doing a load test that simulates millions of active clients, with the backend continually keeping them in sync. This is much harder and will be covered in separate blog posts. Cheers!