Sat May 13 2023


A free game I used to love to play around 2006 was NetPanzer. The only machines I had access to were free Pentium III machines,

which I threw Linux on, so that kinda limited me to games like NetPanzer and WolfET.

This was also the game where, in my youth, I learned through the chat feature how much people hated Americans :)

Recently I'd been fixing bugs and stuff in WolfET (a little in 2014, and then again recently), and wondered what was up

with NetPanzer.

The Current State of Things

The game, today, does not run. If you install NetPanzer from your repo's package manager and start it, it will crash.

If you run it from the shell, you'll see that's because it can't reach the master server, and then it segfaults.

netpanzer.info is no longer online in 2023. I found an archived forum post from 2019 of a maintainer asking for help

with the project. It seems soon after, when nobody replied, the project slowly died and went offline.

UPDATE May 9th: It seems after bugging the maintainer, about a month later, netpanzer.info is back online, and they restored the master

server. So you can play NetPanzer again! I am also working with the maintainer to run a second master server. I've already thrown up one 0.8.x

dedicated server that supports up to 100 players.

A New Map Builder?

One of the issues with the existing game is that, while you can run your own server, you can't create new maps.

The map building tool is long gone, and the asset storage is not immediately obvious. So how do we go about fixing that? Custom maps

allow people to mod the game.

One idea I've been floating around is adding the ability to build and import maps from an existing popular tool. So let's see

if we can figure out how the game assets are managed.

The first assets that are easy to extract are the game flags and icons, which are in BMP format. This gave an early

clue as to how the game assets are stored elsewhere - as raw, uncompressed, bitmaps.

We find some interesting files in a wads folder:

wads/netp.act

wads/netpmenu.act

wads/summer12mb.tls

... and in units/pics/pak we find many .pak files named after the units (like ArchHNSD.pak).

Games of this era commonly stored the assets in ".pak" files, but they all used their own format, the exception being IDTech 3 engine

games (like WolfET) which all used the same format designed by John Carmack.

NetPanzer is not an IDTech 3 game, and uses a custom binary format.

So let's reverse engineer the pak files.

The Different File Types

There are a few binary formats.

The .pak files are purely a header in binary, with the bitmap image data after.

To read the files, we simply seek through X number of bytes at a time:

const unit_contents = fs.readFileSync('units/pics/pak/TitaHNSD.pak');

const unit_buffer = SmartBuffer.fromBuffer(unit_contents);

console.log('unit version', unit_buffer.readInt32LE());

console.log('unit x', unit_buffer.readInt32LE());

console.log('unit y', unit_buffer.readInt32LE());

console.log('unit frame_count', unit_buffer.readInt32LE());

console.log('unit fps', unit_buffer.readInt32LE());

console.log('offset_x', unit_buffer.readInt32LE());

console.log('offset_y', unit_buffer.readInt32LE());
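
The same header read can be sketched in Python with the standard `struct` module. This is a minimal sketch, assuming the header is exactly the seven little-endian 32-bit integers read above, in that order; the function name and the sample values are made up for illustration.

```python
import struct

# Seven little-endian signed 32-bit integers, in the order the article reads them.
PAK_HEADER = struct.Struct("<7i")
PAK_FIELDS = ("version", "x", "y", "frame_count", "fps", "offset_x", "offset_y")


def parse_pak_header(data: bytes) -> dict:
    """Decode the fixed-size header at the start of a .pak file (hypothetical helper)."""
    if len(data) < PAK_HEADER.size:
        raise ValueError("buffer is shorter than the 28-byte header")
    return dict(zip(PAK_FIELDS, PAK_HEADER.unpack_from(data, 0)))


# Synthetic bytes standing in for a real file; these values are invented.
sample = PAK_HEADER.pack(1, 100, 100, 36, 0, -50, -50) + b"\x00" * 16
header = parse_pak_header(sample)
print(header)
```

For a real file, the bytes would come from something like `open('units/pics/pak/TitaHNSD.pak', 'rb').read()`.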

Whereas, the unit metadata is stored in /profiles as text:

hitpoints = 120;

attack = 350;

reload = 110;

range = 48;

regen = 90;

defend_range = 119;

speed_rate = 16;

speed_factor = 2;

image = "pics/menus/vehicleSelectionView/archer.bmp"

bodysprite = "units/pics/pak/ArchHNSD.pak"

bodyshadow = "units/pics/pak/ArchHSSD.pak"

turretsprite = "units/pics/pak/ArchTNSD.pak"

turretshadow = "units/pics/pak/ArchTSSD.pak"

soundselected = "mu-selected"

soundfire = "mu-fire"

weapon = "DOUBLEMISSILE"

boundbox = 40
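
Since the profiles are plain `key = value` text, they are easy to load from a script. Here is a rough sketch, assuming every line has the shape shown above (an optional trailing semicolon, values that are either integers or double-quoted strings); `parse_profile` is a hypothetical helper, not something from the game's code.

```python
def parse_profile(text: str) -> dict:
    """Parse 'key = value' lines into a dict of ints and strings."""
    result = {}
    for raw in text.splitlines():
        # Drop surrounding whitespace and the optional trailing semicolon.
        line = raw.strip().rstrip(";").strip()
        if not line or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if len(value) >= 2 and value[0] == '"' and value[-1] == '"':
            result[key] = value[1:-1]  # quoted string
        else:
            try:
                result[key] = int(value)
            except ValueError:
                result[key] = value  # keep anything unexpected as raw text
    return result


sample_profile = '''hitpoints = 120;
speed_rate = 16;
bodysprite = "units/pics/pak/ArchHNSD.pak"
weapon = "DOUBLEMISSILE"
boundbox = 40
'''
profile = parse_profile(sample_profile)
print(profile)
```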

So what about the .act and .tls files?

Well, the .act files are color palettes. It turns out that the pak files, and the .tls files, simply store offsets

into the .act files. This reduces the amount of data stored in each map and unit file. We simply store the colors once, and can

use a single 8 bit integer to index into one of the 256 colors to save disk space.
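
To make the palette idea concrete, here is a small sketch of expanding indexed pixels into RGB. It assumes an .act file is laid out as 256 consecutive red/green/blue byte triples (768 bytes); the function names and the grayscale test palette are invented for the example.

```python
def load_act_palette(data: bytes) -> list:
    """Split the first 768 bytes into 256 (r, g, b) tuples."""
    if len(data) < 768:
        raise ValueError("expected at least 768 bytes of palette data")
    return [tuple(data[i:i + 3]) for i in range(0, 768, 3)]


def indices_to_rgb(pixels: bytes, palette: list) -> bytes:
    """Expand one-byte palette indices into three-byte RGB pixels."""
    out = bytearray()
    for index in pixels:
        out.extend(palette[index])
    return bytes(out)


# Synthetic grayscale palette: entry i is (i, i, i).
act = bytes(v for i in range(256) for v in (i, i, i))
palette = load_act_palette(act)
rgb = indices_to_rgb(bytes([0, 255, 16]), palette)
print(rgb)
```

Three indexed pixels take 3 bytes on disk, and expand to 9 bytes of RGB only when they are drawn.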

Extracting the game unit assets was quite easy. A bit of time was spent doing this myself, and then I found a file called

pak2bmp.cpp in the original code.

First was to figure out the build system, which uses scons. scons uses a Python file to define the build process, like so:

def globSources(localenv, sourcePrefix, sourceDirs, pattern):
    sources = []
    sourceDirs = Split(sourceDirs)
    for d in sourceDirs:
        sources.append(glob.glob( sourcePrefix + '/' + d + '/' + pattern))
    sources = Flatten(sources)
    targetsources = []
    for s in sources:
        targetsources.append(buildpath + s)

    return targetsources

The build script is a ~280 line Python script. After installing scons the build promptly fails with print not found - as the

file was written for Python 2. Luckily converting it to Python 3 was quite easy, so onward...

I have no idea how to change the default build target with scons to just build our pak2bmp.cpp tool. Eventually I realized I can

just change the default in the SConstruct file:

pak2bmp = env.Program( binpath+'pak2bmp'+exeappend, 'support/tools/pak2bmp.cpp')

Alias('pak2bmp',pak2bmp)

bmp2pak = env.Program( binpath+'bmp2pak'+exeappend, 'support/tools/bmp2pak.cpp')

Alias('bmp2pak',bmp2pak)

Default(map2bmp)

This worked, however I ran into compilation issues with how the codebase was extending std::exception for the networking layer (I would later fix this, but that's for a separate post).

Eventually I decided - we don't need Networking - so after learning scons some more I simply omitted all the networking code from the build.

$ ./pak2bmp

pak2bmp for NetPanzer V 1.1

use: ./pak2bmp <filename> <output_folder> [palette_file_optional]

note: if using palette file, use only the name without extension or path

note2: even on windows the path must be separated by '/'

note3: YOU have to create the output directory

example for using palete (default is netp):

./pak2bmp units/pics/pak/TitaHNSD.pak titan_body netp

Success!

We can now extract all the unit assets with a little script:

ls units/pics/pak | xargs -I{} sh -c 'mkdir -p ./bmp/units/pics/{}'

ls units/pics/pak/ | xargs -I{} sh -c './pak2bmp units/pics/pak/{} ./bmp/units/pics/{}/'

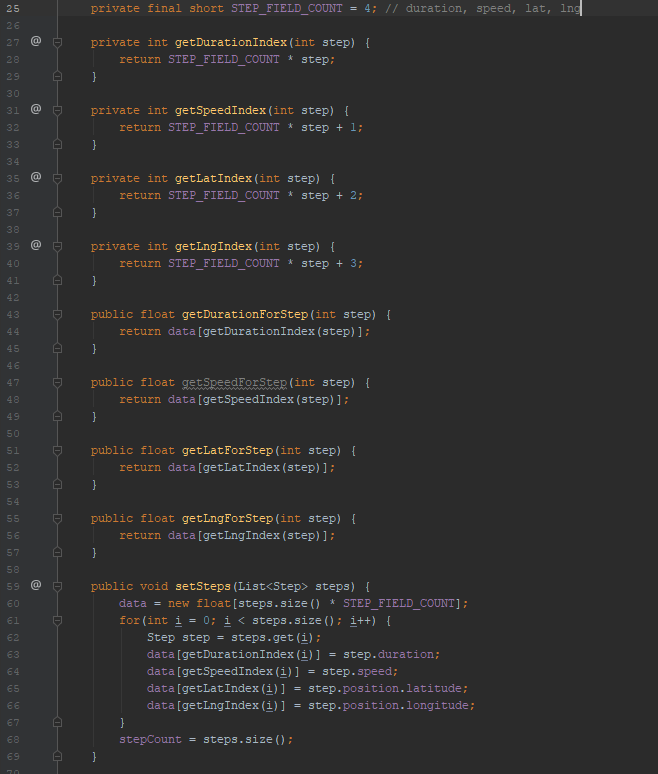

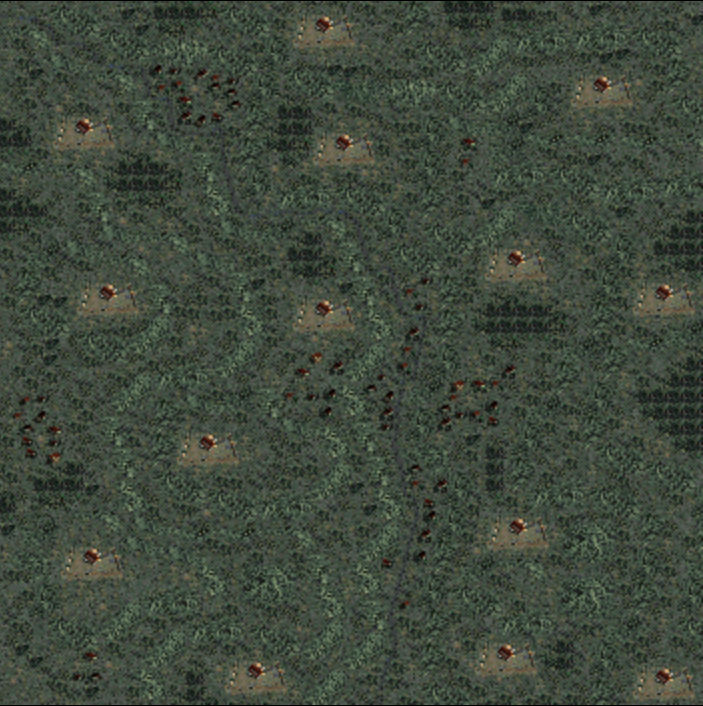

The unit assets are split up into three categories for each unit:

- The unit body, with a frame for each rotation.

- The unit turret, with a frame for each rotation.

- The unit shadow, again with many frames.

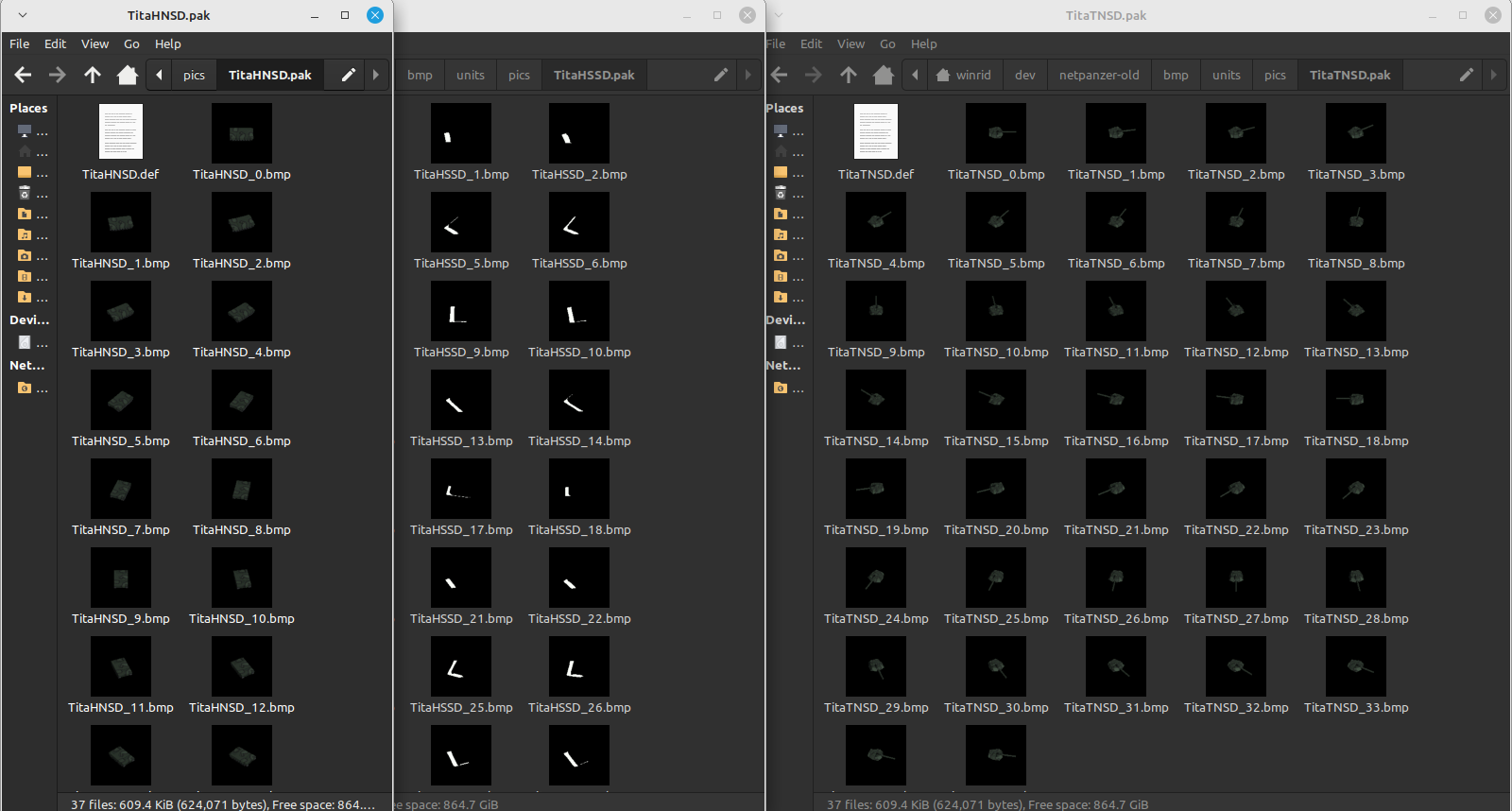

So while in game they look like:

They are actually stored like:

Map data. This is the hard part. There is no "dump the map data" script.

It turns out the actual tile images are stored in summer12mb.tls. As you could guess, this is a 12mb file.

The actual maps are quite small, because they just store offsets into this file.

It's quite a bit more complicated than that, however. I was hoping I could just get a list of bytes, after figuring out the

pointers into the palette, and dump it to a big bitmap file for each map, and then convert this file to another more modern format.

It was not going to be quite this simple. :)

The map data is split across a few structures.

First, the .tls file itself starts with:

class TILE_DBASE_HEADER
{
public:
    unsigned char netp_id_header[64];
    unsigned short version;
    unsigned short x_pix;
    unsigned short y_pix;
    unsigned short tile_count;
    unsigned char palette[768];
};

The map tiles also have a list of this structure, which stores the move_value (how fast a unit can move across the tile, if at all; the game uses A* for pathfinding):

class TILE_HEADER
{
public:
    char attrib;
    char move_value;
    char avg_color;
};

...and finally a uint8 of map_data.
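
For poking at a .tls file from a script, the two structures above map onto `struct` format strings. This is a sketch under several assumptions that the text above does not confirm: the fields are little-endian with no padding, the list of tile headers starts immediately after the database header, and the three `char` fields are read as unsigned bytes (plain `char` signedness depends on the compiler). The helper names are hypothetical.

```python
import struct

# 64-byte id, four unsigned shorts, 768-byte palette: 840 bytes in total.
TILE_DBASE_HEADER = struct.Struct("<64sHHHH768s")
# attrib, move_value, avg_color, read as unsigned bytes (an assumption).
TILE_HEADER = struct.Struct("<3B")


def parse_tileset_header(data: bytes) -> dict:
    ident, version, x_pix, y_pix, tile_count, palette = \
        TILE_DBASE_HEADER.unpack_from(data, 0)
    return {
        "id": ident.rstrip(b"\x00"),
        "version": version,
        "x_pix": x_pix,
        "y_pix": y_pix,
        "tile_count": tile_count,
        "palette": palette,
    }


def parse_tile_headers(data: bytes, tile_count: int) -> list:
    """Read tile_count (attrib, move_value, avg_color) tuples.

    Assumes the list begins right after the database header.
    """
    start = TILE_DBASE_HEADER.size
    return [
        TILE_HEADER.unpack_from(data, start + i * TILE_HEADER.size)
        for i in range(tile_count)
    ]


# Synthetic file contents; every value here is invented.
blob = TILE_DBASE_HEADER.pack(b"demo", 1, 32, 32, 2, bytes(768))
blob += bytes([0, 1, 7, 0, 4, 9])
tileset = parse_tileset_header(blob)
tiles = parse_tile_headers(blob, tileset["tile_count"])
print(tileset["tile_count"], tiles)
```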

Eventually I figured out how the game loads and renders maps, so I could stop reverse engineering the structure and create my own

map2bmp.cpp:

First, we need to "start the map loading", which takes a map name, whether or not to load the map tiles, and the

number of partitions to load it into:

MapInterface::startMapLoad(filename.c_str(), true, 1);

Then there is a function we are supposed to repeatedly call to load the map, which takes a pointer to an integer

that is updated to track the progress:

int percent_complete;
char strbuf[256];

while( MapInterface::loadMap( &percent_complete ) == true ) {
    sprintf( strbuf, "Loading Game Data ... (%d%%)", percent_complete);
}

printf("Map loaded!\n");

Now let's create an SDL1 Surface to write the map to:

int total_width = MapInterface::getMap()->getWidth();
int total_height = MapInterface::getMap()->getHeight();

Surface unpacked(total_width, total_height, 1);

SDL_Surface *surf = SDL_CreateRGBSurfaceFrom(unpacked.getFrame0(),
                                             unpacked.getWidth(),
                                             unpacked.getHeight(),
                                             8,
                                             unpacked.getPitch(), // pitch
                                             0,0,0,0);

if ( ! surf )
{
    printf("surface is null!");
}

Load our palette and tell SDL about it:

Palette::loadACT(palettefile);

SDL_SetColors(surf, Palette::color, 0, 256);

Now we can clear our unpacked surface and write the tiles:

unpacked.fill(0);

for (int x = 0; x < MapInterface::getMap()->getWidth(); x++) {
    for (int y = 0; y < MapInterface::getMap()->getHeight(); y++) {
        // pak.setFrame(n);
        blitTile(unpacked, MapInterface::getMap()->getValue(x, y), x + 32, y + 32);
    }
}

The blitTile function was not easily accessible, as I could not get enough of the project to compile at the time to use it. Luckily

we can just copy it into our script:

void blitTile(Surface &dest, unsigned short tile, int x, int y)
{
    PIX * tileptr = TileInterface::getTileSet()->getTile(tile);

    int lines = 32;
    int columns = 32;

    if ( y < 0 )
    {
        lines = 32 + y;
        tileptr += ((32-lines)*32);
        y = 0;
    }

    if ( x < 0 )
    {
        columns = 32 + x;
        tileptr += (32-columns); // advance the unseen pixels
        x = 0;
    }

    PIX * destptr = dest.getFrame0();
    destptr += (y * dest.getPitch()) + x;

    if ( y + 32 > (int)dest.getHeight() )
    {
        lines = (int)dest.getHeight() - y;
    }

    if ( x + 32 > (int)dest.getWidth())
    {
        columns = (int)dest.getWidth() - x;
    }

    PIX * endptr = destptr + (lines * dest.getPitch());

    for ( /* nothing */ ; destptr < endptr; destptr += dest.getPitch())
    {
        memcpy(destptr,tileptr,columns);
        tileptr +=32;
    }
}

This is quite a fun piece of code because it uses pointer arithmetic. Instead of working with an array, we simply get an address in memory and offset that address as we copy the memory to our surface, based on the "pitch" of the surface, which is the number of bytes between the starts of consecutive rows. The array syntax in C is just sugar for doing this (why we don't use the sugar I don't know).
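
For anyone who finds the pointer version hard to follow, here is the same row-by-row copy sketched in Python, with index arithmetic into a flat `bytearray` standing in for the pointers. It is a restatement for illustration, not the game's code: the function signature is made up, and the clipping is written with `min`/`max` rather than mirroring the C++ branch by branch.

```python
TILE = 32  # tile edge length in pixels, the constant used in blitTile


def blit_tile(dest: bytearray, width: int, height: int, pitch: int,
              tile: bytes, x: int, y: int) -> None:
    """Copy an 8-bit TILE x TILE tile into dest at (x, y), clipping at the edges.

    pitch is the number of bytes between the starts of consecutive rows,
    which can be larger than width when rows are padded.
    """
    src_x = max(0, -x)  # tile columns hidden off the left edge
    src_y = max(0, -y)  # tile rows hidden off the top edge
    x = max(x, 0)
    y = max(y, 0)
    columns = min(TILE - src_x, width - x)
    lines = min(TILE - src_y, height - y)
    if columns <= 0 or lines <= 0:
        return  # tile lies entirely outside the surface
    for row in range(lines):
        src = (src_y + row) * TILE + src_x  # tile rows are TILE bytes apart
        dst = (y + row) * pitch + x         # surface rows are pitch bytes apart
        dest[dst:dst + columns] = tile[src:src + columns]


# A 40x40 surface whose rows are padded to 48 bytes.
surface = bytearray(48 * 40)
blit_tile(surface, 40, 40, 48, bytes([1]) * (TILE * TILE), 0, 0)
blit_tile(surface, 40, 40, 48, bytes([2]) * (TILE * TILE), 32, 32)  # clipped to 8x8
```

The only difference between the source and destination offsets is the row stride: `TILE` for the tile and `pitch` for the surface, which is exactly what `tileptr += 32` and `destptr += dest.getPitch()` do in the C++ loop.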

This is the final version of the code. It took a few iterations to get it right:

Our second attempt, a very blurry map:

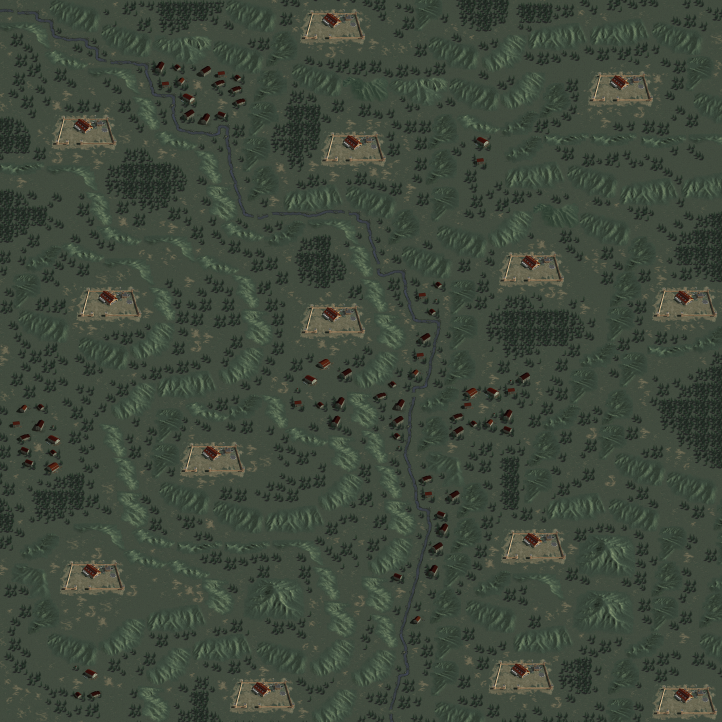

Then a higher quality map that is obviously messed up:

... and finally! The resulting image is uncompressed 11k x 11k pixels. It's 121 megabytes as a BMP file, or 47 MB as a WebP with no perceived loss of quality.
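
The 121 megabyte figure lines up with one byte per pixel. A quick back-of-the-envelope check, assuming the image is exactly 11,000 pixels on each side and is saved as an 8-bit indexed BMP with a 14-byte file header, a 40-byte info header, and a full 256-entry palette (the function name is made up):

```python
def indexed_bmp_size(width: int, height: int) -> int:
    """Approximate size in bytes of an 8-bit indexed BMP."""
    row_bytes = ((width * 8 + 31) // 32) * 4  # BMP rows are padded to 4 bytes
    header_bytes = 14 + 40                    # file header + info header
    palette_bytes = 256 * 4                   # 256 entries, 4 bytes each
    return header_bytes + palette_bytes + row_bytes * height


size = indexed_bmp_size(11_000, 11_000)
print(size, "bytes, about", round(size / 1_000_000), "MB")
```

Almost all of that is pixel data; the headers and palette add up to just over 1 kB.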


Conclusion

I'll be happy to see a new community form around the game if we can add map creation and improve

the hosting story around the server, and maybe some central stats/prestige tracking.

It's neat to see what they did to compress the assets, whereas today we might just end up with a "tiny" 1GB game on Steam. :)
